In this post, I’ll cover in detail all the steps necessary to integrate Apache Zeppelin, Apache Spark and Apache Cassandra.

For the remainder of this post, Zeppelin == Apache Zeppelin™, Spark == Apache Spark™ and Cassandra == Apache Cassandra™.

If you are not familiar with Zeppelin, I recommend reading my introduction slides here

The integration between Spark and Cassandra is achieved using the Spark-Cassandra connector.

Zeppelin supports Spark natively, out of the box. But making Zeppelin support the Spark-Cassandra integration requires some extra work.

Zeppelin – Spark workflow

With Zeppelin, every interpreter is executed in a separate JVM, and this applies to the Spark interpreter too.

The interpreter is first launched in the class RemoteInterpreterProcess:

    public int reference(InterpreterGroup interpreterGroup) {
      ...
      if (!isInterpreterAlreadyExecuting) {
        try {
          port = RemoteInterpreterUtils.findRandomAvailablePortOnAllLocalInterfaces();
        } catch (IOException e1) {
          throw new InterpreterException(e1);
        }

        CommandLine cmdLine = CommandLine.parse(interpreterRunner);
        cmdLine.addArgument("-d", false);
        cmdLine.addArgument(interpreterDir, false);
        cmdLine.addArgument("-p", false);
        cmdLine.addArgument(Integer.toString(port), false);
        cmdLine.addArgument("-l", false);
        cmdLine.addArgument(localRepoDir, false);

        executor = new DefaultExecutor();
        watchdog = new ExecuteWatchdog(ExecuteWatchdog.INFINITE_TIMEOUT);
        executor.setWatchdog(watchdog);
        running = true;
        ...

Indeed, each interpreter is bootstrapped using the interpreterRunner, which is the shell script $ZEPPELIN_HOME/bin/interpreter.sh.

Depending on the interpreter type and run mode, the process is launched with a different command and environment. Here is an extract of the $ZEPPELIN_HOME/bin/interpreter.sh script:

    if [[ -n "${SPARK_SUBMIT}" ]]; then
      ${SPARK_SUBMIT} --class ${ZEPPELIN_SERVER} --driver-class-path "${ZEPPELIN_CLASSPATH_OVERRIDES}:${CLASSPATH}" --driver-java-options "${JAVA_INTP_OPTS}" ${SPARK_SUBMIT_OPTIONS} ${SPARK_APP_JAR} ${PORT} &
    else
      ${ZEPPELIN_RUNNER} ${JAVA_INTP_OPTS} ${ZEPPELIN_INTP_MEM} -cp ${ZEPPELIN_CLASSPATH_OVERRIDES}:${CLASSPATH} ${ZEPPELIN_SERVER} ${PORT} &
    fi

There is a small detail here that is critical for the integration of the Spark-Cassandra connector: the classpath used to launch the interpreter process. The idea is to include the Spark-Cassandra connector dependencies in this classpath so that Zeppelin can access Cassandra through Spark.
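As a quick sanity check on that classpath, here is a minimal sketch of a helper that looks for a connector jar in a colon-separated classpath string. The function name `classpath_has_connector` and the sample paths are hypothetical and not part of Zeppelin. It only matches explicit jar names, so a wildcard entry such as `dep/*` or a fat jar that embeds the connector will not be detected.

```shell
# Hypothetical helper, not part of Zeppelin: succeeds (exit status 0) when the
# given colon-separated classpath mentions a Spark-Cassandra connector jar.
classpath_has_connector() {
  case ":$1:" in
    *spark-cassandra-connector*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example call with made-up paths.
if classpath_has_connector "/opt/zeppelin/lib/a.jar:/opt/jars/spark-cassandra-connector_2.10-1.6.0.jar"; then
  echo "connector jar found on the classpath"
fi
```

You could feed it the classpath of the running interpreter process, copied for instance from the output of `ps`.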

Configuration matrix

There are many parameters and configurations to run Zeppelin with Spark and Cassandra:

- Standard Zeppelin binaries

- Custom Zeppelin build with the Spark-Cassandra connector

- Zeppelin connecting to the local Spark runner

- Zeppelin connecting to a stand-alone Spark cluster

- Using Zeppelin with OSS Spark

- Using Zeppelin with DSE (Datastax Enterprise)

Standard Zeppelin build with local Spark

If you are using the default Zeppelin binaries (downloaded from the official repo), you have to do the following to make the Spark-Cassandra integration work:

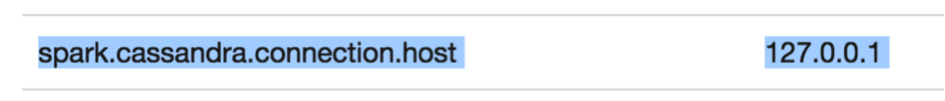

- In the Interpreter menu, add the property spark.cassandra.connection.host to the Spark interpreter. The value should point to one or more IP addresses of your Cassandra cluster

spark_cassandra_connection_host

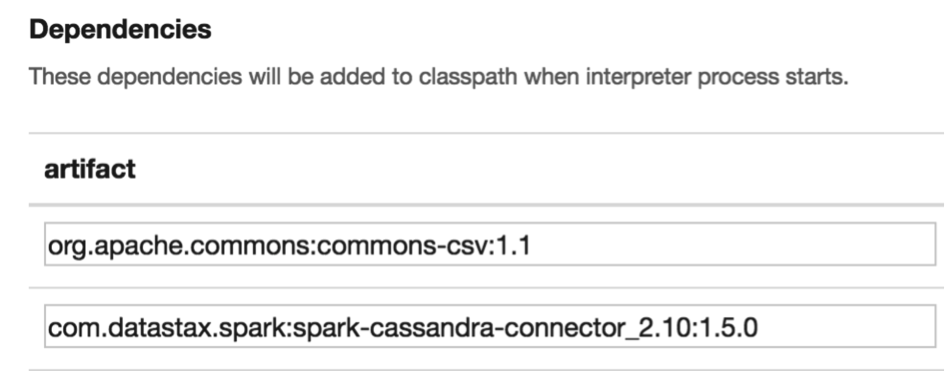

- Last but not least, add the Spark-Cassandra connector as a dependency of the interpreter

spark_cassandra_dependencies

When adding the dependency and the property, do not forget to click the + icon so that Zeppelin registers your change; otherwise it will be lost.

What happens at runtime is that Zeppelin downloads the declared dependencies and all their transitive dependencies from Maven Central and/or from your local Maven repository (if any).

Those dependencies will then be stored inside the local repository folder defined by the property: zeppelin.dep.localrepo.

Also, if you go back to the interpreter configuration menu (after a successful run), you’ll see a new property added by Zeppelin: zeppelin.interpreter.localRepo

interpreter_repo

The last string in the folder (2BTPVTBVH in the example) is the id of the interpreter instance. All transitive dependencies are downloaded and stored as jar files inside $ZEPPELIN_HOME/local-repo/<INTERPRETER_ID> and their content (.class files) is extracted into $ZEPPELIN_HOME/local-repo.

If your Zeppelin server is behind a corporate firewall, the download will fail, so Spark won’t be able to connect to Cassandra (you’ll get a ClassNotFoundException in the Spark interpreter logs).
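To see what was actually downloaded for a given interpreter, a small sketch like the one below can help. The function name `list_interpreter_jars` is hypothetical; the directory layout is the one described above, and the interpreter id is whatever your own installation shows.

```shell
# Hypothetical helper: print the jar files stored for one interpreter instance.
# $1 = Zeppelin home directory, $2 = interpreter id (e.g. 2BTPVTBVH)
list_interpreter_jars() {
  for jar_file in "$1/local-repo/$2"/*.jar; do
    # The glob stays unexpanded when nothing matches, so keep real files only.
    if [ -f "${jar_file}" ]; then
      echo "${jar_file}"
    fi
  done
  return 0
}
```

For example, `list_interpreter_jars "$ZEPPELIN_HOME" 2BTPVTBVH | grep spark-cassandra-connector` should print at least one line once the dependency has been resolved. An empty result suggests the download has not happened yet.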

The solution in this case is:

- either manually download all the dependencies and put them into the folder defined by zeppelin.dep.localrepo

- or build Zeppelin with the Spark-Cassandra connector integrated (see the next section)

Custom Zeppelin build with local Spark

You’ll need to build Zeppelin yourself, using one of the available Maven profiles cassandra-spark-1.x to get the correct Spark version.

Those profiles are defined in the $ZEPPELIN_HOME/spark-dependencies/pom.xml file.

For each cassandra-spark-1.x profile, you can override the defined Spark version using the -Dspark.version=x.y.z flag for the build. To change the Spark-Cassandra connector version, you’ll need to edit the $ZEPPELIN_HOME/spark-dependencies/pom.xml file yourself. Similarly, if you want to use the latest version of the Spark-Cassandra connector and no profile exists for it, just edit the file and add your own profile.

In a nutshell, the build command is

>mvn clean package -Pbuild-distr -Pcassandra-spark-1.x -DskipTests

or with the Spark version manually forced:

>mvn clean package -Pbuild-distr -Pcassandra-spark-1.x -Dspark.version=x.y.z -DskipTests

This will force Zeppelin to add all transitive dependencies of the Spark-Cassandra connector into the fat jar file located at $ZEPPELIN_HOME/interpreter/spark/dep/zeppelin-spark-dependencies-<ZEPPELIN_VERSION>.jar.

One easy way to verify that the Spark-Cassandra connector has been correctly embedded into this file is to copy it somewhere and extract its content to check using the command jar -xvf zeppelin-spark-dependencies-<ZEPPELIN_VERSION>.jar
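If you prefer not to extract the archive, a lighter check is to list its entries and search for the connector package. The sketch below assumes the connector classes live under `com/datastax/spark/connector/` (the package used by the connector's Scala imports). The function name `listing_has_connector` is hypothetical.

```shell
# Hypothetical helper: reads a jar listing (one entry per line) on stdin and
# succeeds when it contains classes from the Spark-Cassandra connector package.
listing_has_connector() {
  grep -q 'com/datastax/spark/connector/'
}

# Example with a made-up two-line listing.
printf 'META-INF/MANIFEST.MF\ncom/datastax/spark/connector/cql/CassandraConnector.class\n' \
  | listing_has_connector && echo "connector classes are embedded"
```

With a real build you would pipe the output of `jar -tf zeppelin-spark-dependencies-<ZEPPELIN_VERSION>.jar` into the function instead of the sample listing.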

Once built, you can use this special version of Zeppelin without declaring any dependency on the Spark-Cassandra connector. You still have to set the spark.cassandra.connection.host property on the Spark interpreter.

Zeppelin connecting to a stand-alone OSS Spark cluster

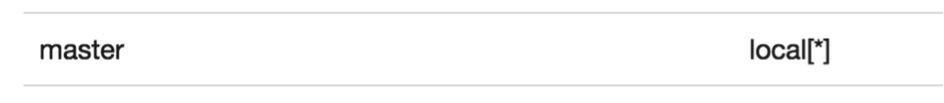

Until now, we have supposed that you are using the local Spark mode of Zeppelin (master = local[*]). In this section, we want Zeppelin to connect to an existing stand-alone Spark cluster (Spark running on Yarn and Mesos is not covered here because it is recommended to run Spark in stand-alone mode with Cassandra to benefit from data-locality).

First, you’ll need to set the Spark master property for the Spark interpreter. Instead of local[*], put a real address like spark://x.y.z:7077.

spark_master_url

The extract of the shell script from the first section showed that Zeppelin will invoke the spark-submit command, passing its own Spark jar with all the transitive dependencies using the parameter --driver-class-path.

But where does Zeppelin fetch all the dependency jars from? From the local repository seen earlier!

As a consequence, if you add the Spark-Cassandra connector as a dependency (standard Zeppelin build) and run against a stand-alone Spark cluster, it will fail because the local repository will be empty. First run a simple Spark job in local Spark mode to give Zeppelin a chance to download the dependencies before switching to the stand-alone Spark cluster.

But that is not sufficient: on your stand-alone Spark cluster, you must also add the Spark-Cassandra connector dependencies to the Spark classpath so that the workers can connect to Cassandra.

How to do that?

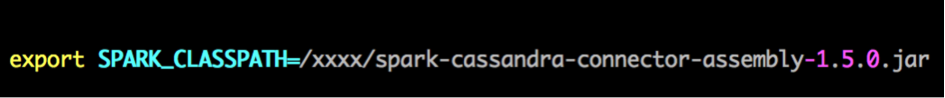

- edit the $SPARK_HOME/conf/spark-env.sh file and add the Spark-Cassandra dependencies to the SPARK_CLASSPATH variable.

spark_classpath

As you can see, it’s not just the simple spark-cassandra connector jar we need but the assembly jar, i.e. the fat jar which includes all transitive dependencies.

To get this jar, you’ll have to build it yourself:

git clone https://github.com/datastax/spark-cassandra-connector/

cd spark-cassandra-connector

sbt assembly

- another alternative is to execute the

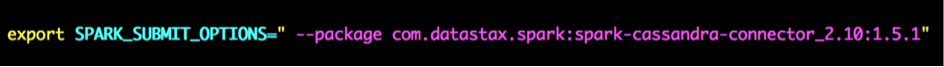

spark-submitcommand with the--package com.datastax.spark:spark-cassandra-connector_2.10:<connector_version>flag. In this case, Spark is clever enough to fetch all the transitive dependencies for you from a remote repository.

The same warning about corporate firewall applies here.
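For the first option (the SPARK_CLASSPATH variable), the entry in $SPARK_HOME/conf/spark-env.sh could look like the sketch below. The jar location is a hypothetical path and should point at the assembly jar you built. Note that Spark 1.x logs a deprecation warning for SPARK_CLASSPATH and suggests `spark.executor.extraClassPath` instead.

```shell
# Sketch of an entry for $SPARK_HOME/conf/spark-env.sh.
# Hypothetical location: replace with the path of your own assembly jar.
CONNECTOR_ASSEMBLY_JAR="/opt/jars/spark-cassandra-connector-assembly.jar"

# Append to an existing SPARK_CLASSPATH rather than overwriting it.
export SPARK_CLASSPATH="${SPARK_CLASSPATH:+${SPARK_CLASSPATH}:}${CONNECTOR_ASSEMBLY_JAR}"
```

The `${VAR:+...}` expansion only adds the `:` separator when SPARK_CLASSPATH already has a value, which avoids a leading empty classpath entry.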

How would you add this extra --packages flag to Zeppelin's spark-submit? By exporting the SPARK_SUBMIT_OPTIONS environment variable in $ZEPPELIN_HOME/conf/zeppelin-env.sh.

spark_submit_options
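In text form, the export could look like the following sketch. The spark-submit flag is spelled `--packages`. The connector version used here is an assumption, so pick the release that matches your Spark version.

```shell
# Sketch of an entry for $ZEPPELIN_HOME/conf/zeppelin-env.sh.
# CONNECTOR_VERSION is an assumption: use the release matching your Spark version.
CONNECTOR_VERSION="1.6.0"

# interpreter.sh passes SPARK_SUBMIT_OPTIONS through to spark-submit.
export SPARK_SUBMIT_OPTIONS="--packages com.datastax.spark:spark-cassandra-connector_2.10:${CONNECTOR_VERSION}"
```

The Spark interpreter has to be restarted for the new options to be picked up, since they are only read when the interpreter process is launched.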

Using the --packages flag seems easy, but it is not suitable for a recurrent Spark job because it forces Spark to download all the dependencies.

If your Spark job is not a one-shot job, I would recommend building the assembly jar for the Spark-Cassandra connector and setting it in the SPARK_CLASSPATH variable so that it is available for all of your Spark jobs.

I have pre-built some assembly jars (using Scala 2.10) you can download here

Zeppelin connecting to a stand-alone Datastax Enterprise cluster

Compared with open-source Spark, Datastax Enterprise (DSE) makes your life easier because all the dependencies of the Spark-Cassandra connector are included by default in its build of Spark. So there is neither a SPARK_CLASSPATH variable to set nor a --packages flag to manage on the Zeppelin side.

But you’ll still need to either declare the Spark-Cassandra connector dependency on the Zeppelin side or build Zeppelin with the connector embedded.

Pay attention if you want to build Zeppelin for DSE because each version of DSE 4.8.x is using a custom Spark version and Hadoop 1 dependencies.

Zeppelin custom builds

To make your life easier, I have created a list of custom Zeppelin builds for each version of OSS Spark/DSE. All the custom Zeppelin builds are located in a shared Google Drive folder.

The custom Maven pom file spark-dependencies-pon.xml used for building those versions is provided as a reference.

| Zeppelin version | Spark version/DSE version | Spark-Cassandra connector version | Tarball |
|---|---|---|---|
| 0.6.0 | Spark 1.4.0 | 1.4.4 | zeppelin-0.6.0-cassandra-spark-1.4.0.tar.gz |
| 0.6.0 | Spark 1.4.1 | 1.4.4 | zeppelin-0.6.0-cassandra-spark-1.4.1.tar.gz |
| 0.6.0 | Spark 1.5.0 | 1.5.1 | zeppelin-0.6.0-cassandra-spark-1.5.0.tar.gz |
| 0.6.0 | Spark 1.5.1 | 1.5.1 | zeppelin-0.6.0-cassandra-spark-1.5.1.tar.gz |
| 0.6.0 | Spark 1.5.2 | 1.5.1 | zeppelin-0.6.0-cassandra-spark-1.5.2.tar.gz |
| 0.6.0 | Spark 1.6.0 | 1.6.0 | zeppelin-0.6.0-cassandra-spark-1.6.0.tar.gz |
| 0.6.0 | Spark 1.6.1 | 1.6.0 | zeppelin-0.6.0-cassandra-spark-1.6.1.tar.gz |
| 0.6.0 | Spark 1.6.2 | 1.6.0 | zeppelin-0.6.0-cassandra-spark-1.6.2.tar.gz |
| 0.6.0 | DSE 4.8.3, DSE 4.8.4 (Spark 1.4.1) | 1.4.1 | zeppelin-0.6.0-dse-4.8.3-4.8.4.tar.gz |
| 0.6.0 | DSE 4.8.5, DSE 4.8.6 (Spark 1.4.1) | 1.4.2 | zeppelin-0.6.0-dse-4.8.5-4.8.6.tar.gz |
| 0.6.0 | DSE 4.8.7 (Spark 1.4.1) | 1.4.3 | zeppelin-0.6.0-dse-4.8.7.tar.gz |
| 0.6.0 | DSE 4.8.8, DSE 4.8.9 (Spark 1.4.1) | 1.4.4 | zeppelin-0.6.0-dse-4.8.8-4.8.9.tar.gz |
| 0.6.0 | DSE 5.0.0, DSE 5.0.1 (Spark 1.6.1) | 1.6.0 | zeppelin-0.6.0-dse-5.0.0-5.0.1.tar.gz |
| 0.6.1 | DSE 5.0.2, DSE 5.0.3 (Spark 1.6.2) | 1.6.0 | zeppelin-0.6.1-dse-5.0.2-5.0.3.tar.gz |
| 0.7.0 | DSE 5.0.4 (Spark 1.6.2) | 1.6.2 | zeppelin-0.7.0-DSE-5.0.4.tar.gz |
| 0.7.0 | DSE 5.0.5 (Spark 1.6.2) | 1.6.3 | zeppelin-0.7.0-DSE-5.0.4.tar.gz |
| 0.7.0 | DSE 5.0.6 (Spark 1.6.3) | 1.6.4 | zeppelin-0.7.0-DSE-5.0.6.tar.gz |
| 0.7.1 | DSE 5.1.0 (Spark 2.0.2) | 2.0.1 | zeppelin-0.7.1-dse-5.1.0.tar.gz |
| 0.7.1 | DSE 5.1.1 (Spark 2.0.2) | 2.0.2 | zeppelin-0.7.1-dse-5.1.1.tar.gz |

Hey I was trying to configure Zeppelin to run on DSE 5.0.1, but when I run:

import com.datastax.spark.connector._

val rdd = sc.cassandraTable("test", "words")

It throws the following error:

import com.datastax.spark.connector._

java.lang.NoClassDefFoundError: com/datastax/spark/connector/cql/CassandraConnector$

at com.datastax.spark.connector.SparkContextFunctions.cassandraTable$default$3(SparkContextFunctions.scala:52)

… 58 elided

I also passed the following dependency to the Spark interpreter:

/usr/share/dse/spark/lib/spark-cassandra-connector_2.10-1.6.0.jar — as an artifact

Did you download my custom build for DSE 5.0.1 (zeppelin-0.6.0-dse-5.0.0-5.0.1.tar.gz) ?

Nope, I downloaded version 0.6.1 from https://zeppelin.apache.org/download.html. Would you mind sharing the link to your custom build repo?

Thank you!

The link is in the blog post above …

https://drive.google.com/folderview?id=0B6wR2aj4Cb6wQ01aR3ItR0xUNms

I was unable to get your custom build to run properly; the Zeppelin process kept dying. However, I was able to recreate the zeppelin-spark-dependencies jar and replace the jar in the original Zeppelin spark/dep directory. Thank you very much for the guidance in your blog!

Hi Doan,

I am using your custom build 0.6.1 with DSE 5.0.3. It works great.

Zeppelin 0.6.2 is out and I am wondering how often you create a new Zeppelin build for DSE?

Thanks,

Steven

I’ll update the version matrix with the latest DSE/Zeppelin releases.

For old versions, if people need to use Zeppelin 0.6.2 with DSE 4.8.x for example, they’ll have to ask me for a one-off build.

Hi Doan,

I am using Cassandra Community Edition version 3.0.6 and Zeppelin 0.6.1 from the official site.

Is there any guide for this situation?

I entered the correct settings in the Cassandra interpreter but got an exception:

java.lang.IllegalArgumentException: Repository: cannot add mbean for pattern name SkyAid C* Cluster v3.1-metrics:name=”read-timeouts”

Our cluster name is SkyAid C* Cluster v3.1

Thanks for your post!

Wenjun

Can you copy and paste the full exception stack trace? Look in the $ZEPPELIN_HOME/logs/xxx-xxx-cassandra-interpreter.log file.

Hi Doan,

Thanks for your help.

I uploaded the log file to my website:

http://www.flyml.net/tmp/zeppelin-interpreter-cassandra-user-skyaid-monitor.log

Thanks a lot!

Wenjun

“Repository: cannot add mbean for pattern name SkyAid C* Cluster v3.1-metrics:name=”read-timeouts”

This means that your cluster name “SkyAid C* Cluster v3.1” contains a character that is invalid in a JMX bean name. The * character should probably be removed.
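As a rough pre-check, and assuming (as suggested above) that the wildcard characters are the culprit, a cluster name can be screened before it is used. In JMX, `*` and `?` turn an object name into a pattern, which is consistent with the "pattern name" wording of the error. The function name `is_jmx_safe_cluster_name` is hypothetical, and the check deliberately covers only these two characters.

```shell
# Hypothetical helper: fails when the cluster name contains the JMX wildcard
# characters '*' or '?', which would make the resulting bean name a pattern.
is_jmx_safe_cluster_name() {
  case "$1" in
    *[*?]*) return 1 ;;
    *) return 0 ;;
  esac
}

if ! is_jmx_safe_cluster_name 'SkyAid C* Cluster v3.1'; then
  echo "cluster name contains a JMX wildcard character"
fi
```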

Oh, that’s a problem. I think it is very hard to change the name of a production cluster.

I think I can build up another demo cluster to verify this.

Thanks a lot for your help!

Hi Doan,

I was able to get this to work with spark but not with pyspark. If I try

>>> %pyspark

>>> sc

I get:

Traceback (most recent call last):

File “/tmp/zeppelin_pyspark-504532695197216693.py”, line 20, in

from py4j.java_gateway import java_import, JavaGateway, GatewayClient

ImportError: No module named py4j.java_gateway

pyspark is not responding

Is there a simple fix to that?

Thanks,

Alessandro

Get me the log in $ZEPPELIN_HOME/logs/xxxx-interpreter-spark-xxx.log

INFO [2016-12-19 22:04:57,251] ({Thread-0} RemoteInterpreterServer.java[run]:81) – Starting remote interpreter server on port 40254

INFO [2016-12-19 22:04:57,819] ({pool-1-thread-2} RemoteInterpreterServer.java[createInterpreter]:169) – Instantiate interpreter org.apache.zeppelin.spark.PySparkInterpreter

INFO [2016-12-19 22:04:57,905] ({pool-1-thread-2} RemoteInterpreterServer.java[createInterpreter]:169) – Instantiate interpreter org.apache.zeppelin.spark.SparkInterpreter

INFO [2016-12-19 22:04:57,910] ({pool-1-thread-2} RemoteInterpreterServer.java[createInterpreter]:169) – Instantiate interpreter org.apache.zeppelin.spark.SparkSqlInterpreter

INFO [2016-12-19 22:04:57,915] ({pool-1-thread-2} RemoteInterpreterServer.java[createInterpreter]:169) – Instantiate interpreter org.apache.zeppelin.spark.DepInterpreter

INFO [2016-12-19 22:04:57,960] ({pool-2-thread-2} SchedulerFactory.java[jobStarted]:131) – Job remoteInterpretJob_1482185097958 started by scheduler interpreter_522907625

INFO [2016-12-19 22:04:57,969] ({pool-2-thread-2} PySparkInterpreter.java[createPythonScript]:108) – File /tmp/zeppelin_pyspark-1890278518472862349.py created

WARN [2016-12-19 22:04:59,817] ({pool-2-thread-2} NativeCodeLoader.java[]:62) – Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

INFO [2016-12-19 22:04:59,940] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Changing view acls to: ubuntu

INFO [2016-12-19 22:04:59,942] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Changing modify acls to: ubuntu

INFO [2016-12-19 22:04:59,944] ({pool-2-thread-2} Logging.scala[logInfo]:58) – SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(ubuntu); users with modify permissions: Set(ubuntu)

INFO [2016-12-19 22:05:00,468] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Starting HTTP Server

INFO [2016-12-19 22:05:00,564] ({pool-2-thread-2} Server.java[doStart]:272) – jetty-8.y.z-SNAPSHOT

INFO [2016-12-19 22:05:00,597] ({pool-2-thread-2} AbstractConnector.java[doStart]:338) – Started SocketConnector@0.0.0.0:37297

INFO [2016-12-19 22:05:00,599] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Successfully started service ‘HTTP class server’ on port 37297.

INFO [2016-12-19 22:05:02,848] ({pool-2-thread-2} SparkInterpreter.java[createSparkContext_1]:367) – —— Create new SparkContext local[*] ——-

INFO [2016-12-19 22:05:02,867] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Running Spark version 1.6.2

WARN [2016-12-19 22:05:02,886] ({pool-2-thread-2} Logging.scala[logWarning]:70) –

SPARK_CLASSPATH was detected (set to ‘:/home/ubuntu/zeppelin-0.6.1/interpreter/spark/dep/*:/home/ubuntu/zeppelin-0.6.1/interpreter/spark/*::/home/ubuntu/zeppelin-0.6.1/lib/zeppelin-interpreter-0.6.1.jar’).

This is deprecated in Spark 1.0+.

Please instead use:

- ./spark-submit with --driver-class-path to augment the driver classpath

- spark.executor.extraClassPath to augment the executor classpath

WARN [2016-12-19 22:05:02,887] ({pool-2-thread-2} Logging.scala[logWarning]:70) – Setting ‘spark.executor.extraClassPath’ to ‘:/home/ubuntu/zeppelin-0.6.1/interpreter/spark/dep/*:/home/ubuntu/zeppelin-0.6.1/interpreter/spark/*::/home/ubuntu/zeppelin-0.6.1/lib/zeppelin-interpreter-0.6.1.jar’ as a work-around.

WARN [2016-12-19 22:05:02,887] ({pool-2-thread-2} Logging.scala[logWarning]:70) – Setting ‘spark.driver.extraClassPath’ to ‘:/home/ubuntu/zeppelin-0.6.1/interpreter/spark/dep/*:/home/ubuntu/zeppelin-0.6.1/interpreter/spark/*::/home/ubuntu/zeppelin-0.6.1/lib/zeppelin-interpreter-0.6.1.jar’ as a work-around.

INFO [2016-12-19 22:05:02,907] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Changing view acls to: ubuntu

INFO [2016-12-19 22:05:02,907] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Changing modify acls to: ubuntu

INFO [2016-12-19 22:05:02,907] ({pool-2-thread-2} Logging.scala[logInfo]:58) – SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(ubuntu); users with modify permissions: Set(ubuntu)

INFO [2016-12-19 22:05:03,166] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Successfully started service ‘sparkDriver’ on port 44007.

INFO [2016-12-19 22:05:03,534] ({sparkDriverActorSystem-akka.actor.default-dispatcher-3} Slf4jLogger.scala[applyOrElse]:80) – Slf4jLogger started

INFO [2016-12-19 22:05:03,599] ({sparkDriverActorSystem-akka.actor.default-dispatcher-3} Slf4jLogger.scala[apply$mcV$sp]:74) – Starting remoting

INFO [2016-12-19 22:05:03,792] ({sparkDriverActorSystem-akka.actor.default-dispatcher-4} Slf4jLogger.scala[apply$mcV$sp]:74) – Remoting started; listening on addresses :[akka.tcp://sparkDriverActorSystem@172.31.30.234:41885]

INFO [2016-12-19 22:05:03,798] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Successfully started service ‘sparkDriverActorSystem’ on port 41885.

INFO [2016-12-19 22:05:03,816] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Registering MapOutputTracker

INFO [2016-12-19 22:05:03,837] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Registering BlockManagerMaster

INFO [2016-12-19 22:05:03,854] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Created local directory at /tmp/blockmgr-b5bce93f-8931-441e-9842-6dcac2badcea

INFO [2016-12-19 22:05:03,859] ({pool-2-thread-2} Logging.scala[logInfo]:58) – MemoryStore started with capacity 511.1 MB

INFO [2016-12-19 22:05:03,927] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Registering OutputCommitCoordinator

INFO [2016-12-19 22:05:04,049] ({pool-2-thread-2} Server.java[doStart]:272) – jetty-8.y.z-SNAPSHOT

INFO [2016-12-19 22:05:04,059] ({pool-2-thread-2} AbstractConnector.java[doStart]:338) – Started SelectChannelConnector@0.0.0.0:4040

INFO [2016-12-19 22:05:04,059] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Successfully started service ‘SparkUI’ on port 4040.

INFO [2016-12-19 22:05:04,061] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Started SparkUI at http://172.31.30.234:4040

INFO [2016-12-19 22:05:04,129] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Created default pool default, schedulingMode: FIFO, minShare: 0, weight: 1

INFO [2016-12-19 22:05:04,153] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Starting executor ID driver on host localhost

INFO [2016-12-19 22:05:04,160] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Using REPL class URI: http://172.31.30.234:37297

INFO [2016-12-19 22:05:04,188] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Successfully started service ‘org.apache.spark.network.netty.NettyBlockTransferService’ on port 45107.

INFO [2016-12-19 22:05:04,188] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Server created on 45107

INFO [2016-12-19 22:05:04,189] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Trying to register BlockManager

INFO [2016-12-19 22:05:04,192] ({dispatcher-event-loop-0} Logging.scala[logInfo]:58) – Registering block manager localhost:45107 with 511.1 MB RAM, BlockManagerId(driver, localhost, 45107)

INFO [2016-12-19 22:05:04,196] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Registered BlockManager

INFO [2016-12-19 22:05:04,829] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Initializing execution hive, version 1.2.1

INFO [2016-12-19 22:05:04,906] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Inspected Hadoop version: 2.7.1

INFO [2016-12-19 22:05:04,906] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Loaded org.apache.hadoop.hive.shims.Hadoop23Shims for Hadoop version 2.7.1

INFO [2016-12-19 22:05:05,582] ({pool-2-thread-2} HiveMetaStore.java[newRawStore]:589) – 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

INFO [2016-12-19 22:05:05,616] ({pool-2-thread-2} ObjectStore.java[initialize]:289) – ObjectStore, initialize called

INFO [2016-12-19 22:05:05,755] ({pool-2-thread-2} Log4JLogger.java[info]:77) – Property hive.metastore.integral.jdo.pushdown unknown – will be ignored

INFO [2016-12-19 22:05:05,756] ({pool-2-thread-2} Log4JLogger.java[info]:77) – Property datanucleus.cache.level2 unknown – will be ignored

INFO [2016-12-19 22:05:07,605] ({pool-2-thread-2} ObjectStore.java[getPMF]:370) – Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes=”Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order”

INFO [2016-12-19 22:05:08,234] ({pool-2-thread-2} Log4JLogger.java[info]:77) – The class “org.apache.hadoop.hive.metastore.model.MFieldSchema” is tagged as “embedded-only” so does not have its own datastore table.

INFO [2016-12-19 22:05:08,235] ({pool-2-thread-2} Log4JLogger.java[info]:77) – The class “org.apache.hadoop.hive.metastore.model.MOrder” is tagged as “embedded-only” so does not have its own datastore table.

INFO [2016-12-19 22:05:09,336] ({pool-2-thread-2} Log4JLogger.java[info]:77) – The class “org.apache.hadoop.hive.metastore.model.MFieldSchema” is tagged as “embedded-only” so does not have its own datastore table.

INFO [2016-12-19 22:05:09,337] ({pool-2-thread-2} Log4JLogger.java[info]:77) – The class “org.apache.hadoop.hive.metastore.model.MOrder” is tagged as “embedded-only” so does not have its own datastore table.

INFO [2016-12-19 22:05:09,639] ({pool-2-thread-2} MetaStoreDirectSql.java[]:139) – Using direct SQL, underlying DB is DERBY

INFO [2016-12-19 22:05:09,643] ({pool-2-thread-2} ObjectStore.java[setConf]:272) – Initialized ObjectStore

WARN [2016-12-19 22:05:09,755] ({pool-2-thread-2} ObjectStore.java[checkSchema]:6666) – Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.2.0

WARN [2016-12-19 22:05:09,854] ({pool-2-thread-2} ObjectStore.java[getDatabase]:568) – Failed to get database default, returning NoSuchObjectException

INFO [2016-12-19 22:05:10,064] ({pool-2-thread-2} HiveMetaStore.java[createDefaultRoles_core]:663) – Added admin role in metastore

INFO [2016-12-19 22:05:10,072] ({pool-2-thread-2} HiveMetaStore.java[createDefaultRoles_core]:672) – Added public role in metastore

INFO [2016-12-19 22:05:10,162] ({pool-2-thread-2} HiveMetaStore.java[addAdminUsers_core]:712) – No user is added in admin role, since config is empty

INFO [2016-12-19 22:05:10,254] ({pool-2-thread-2} HiveMetaStore.java[logInfo]:746) – 0: get_all_databases

INFO [2016-12-19 22:05:10,255] ({pool-2-thread-2} HiveMetaStore.java[logAuditEvent]:371) – ugi=ubuntu ip=unknown-ip-addr cmd=get_all_databases

INFO [2016-12-19 22:05:10,270] ({pool-2-thread-2} HiveMetaStore.java[logInfo]:746) – 0: get_functions: db=default pat=*

INFO [2016-12-19 22:05:10,270] ({pool-2-thread-2} HiveMetaStore.java[logAuditEvent]:371) – ugi=ubuntu ip=unknown-ip-addr cmd=get_functions: db=default pat=*

INFO [2016-12-19 22:05:10,271] ({pool-2-thread-2} Log4JLogger.java[info]:77) – The class “org.apache.hadoop.hive.metastore.model.MResourceUri” is tagged as “embedded-only” so does not have its own datastore table.

INFO [2016-12-19 22:05:10,518] ({pool-2-thread-2} SessionState.java[createPath]:641) – Created HDFS directory: /tmp/hive/ubuntu

INFO [2016-12-19 22:05:10,524] ({pool-2-thread-2} SessionState.java[createPath]:641) – Created local directory: /tmp/ubuntu

INFO [2016-12-19 22:05:10,538] ({pool-2-thread-2} SessionState.java[createPath]:641) – Created local directory: /tmp/7ab33f4a-c752-4d88-8b81-3cb76649361e_resources

INFO [2016-12-19 22:05:10,545] ({pool-2-thread-2} SessionState.java[createPath]:641) – Created HDFS directory: /tmp/hive/ubuntu/7ab33f4a-c752-4d88-8b81-3cb76649361e

INFO [2016-12-19 22:05:10,551] ({pool-2-thread-2} SessionState.java[createPath]:641) – Created local directory: /tmp/ubuntu/7ab33f4a-c752-4d88-8b81-3cb76649361e

INFO [2016-12-19 22:05:10,559] ({pool-2-thread-2} SessionState.java[createPath]:641) – Created HDFS directory: /tmp/hive/ubuntu/7ab33f4a-c752-4d88-8b81-3cb76649361e/_tmp_space.db

INFO [2016-12-19 22:05:10,647] ({pool-2-thread-2} Logging.scala[logInfo]:58) – default warehouse location is /user/hive/warehouse

INFO [2016-12-19 22:05:10,656] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Initializing HiveMetastoreConnection version 1.2.1 using Spark classes.

INFO [2016-12-19 22:05:10,669] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Inspected Hadoop version: 2.7.1

INFO [2016-12-19 22:05:10,680] ({pool-2-thread-2} Logging.scala[logInfo]:58) – Loaded org.apache.hadoop.hive.shims.Hadoop23Shims for Hadoop version 2.7.1

INFO [2016-12-19 22:05:11,203] ({pool-2-thread-2} HiveMetaStore.java[newRawStore]:589) – 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

INFO [2016-12-19 22:05:11,226] ({pool-2-thread-2} ObjectStore.java[initialize]:289) – ObjectStore, initialize called

INFO [2016-12-19 22:05:11,316] ({pool-2-thread-2} Log4JLogger.java[info]:77) – Property hive.metastore.integral.jdo.pushdown unknown – will be ignored

INFO [2016-12-19 22:05:11,316] ({pool-2-thread-2} Log4JLogger.java[info]:77) – Property datanucleus.cache.level2 unknown – will be ignored

INFO [2016-12-19 22:05:13,004] ({pool-2-thread-2} ObjectStore.java[getPMF]:370) – Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes=”Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order”

INFO [2016-12-19 22:05:13,535] ({pool-2-thread-2} Log4JLogger.java[info]:77) – The class “org.apache.hadoop.hive.metastore.model.MFieldSchema” is tagged as “embedded-only” so does not have its own datastore table.

INFO [2016-12-19 22:05:13,536] ({pool-2-thread-2} Log4JLogger.java[info]:77) – The class “org.apache.hadoop.hive.metastore.model.MOrder” is tagged as “embedded-only” so does not have its own datastore table.

INFO [2016-12-19 22:05:14,563] ({pool-2-thread-2} Log4JLogger.java[info]:77) – The class “org.apache.hadoop.hive.metastore.model.MFieldSchema” is tagged as “embedded-only” so does not have its own datastore table.

INFO [2016-12-19 22:05:14,563] ({pool-2-thread-2} Log4JLogger.java[info]:77) – The class “org.apache.hadoop.hive.metastore.model.MOrder” is tagged as “embedded-only” so does not have its own datastore table.

INFO [2016-12-19 22:05:14,767] ({pool-2-thread-2} MetaStoreDirectSql.java[]:139) – Using direct SQL, underlying DB is DERBY

INFO [2016-12-19 22:05:14,769] ({pool-2-thread-2} ObjectStore.java[setConf]:272) – Initialized ObjectStore

WARN [2016-12-19 22:05:14,863] ({pool-2-thread-2} ObjectStore.java[checkSchema]:6666) – Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.2.0

WARN [2016-12-19 22:05:14,960] ({pool-2-thread-2} ObjectStore.java[getDatabase]:568) – Failed to get database default, returning NoSuchObjectException

INFO [2016-12-19 22:05:15,058] ({pool-2-thread-2} HiveMetaStore.java[createDefaultRoles_core]:663) – Added admin role in metastore

INFO [2016-12-19 22:05:15,064] ({pool-2-thread-2} HiveMetaStore.java[createDefaultRoles_core]:672) – Added public role in metastore

INFO [2016-12-19 22:05:15,155] ({pool-2-thread-2} HiveMetaStore.java[addAdminUsers_core]:712) – No user is added in admin role, since config is empty

INFO [2016-12-19 22:05:15,227] ({pool-2-thread-2} HiveMetaStore.java[logInfo]:746) – 0: get_all_databases

INFO [2016-12-19 22:05:15,228] ({pool-2-thread-2} HiveMetaStore.java[logAuditEvent]:371) – ugi=ubuntu ip=unknown-ip-addr cmd=get_all_databases

INFO [2016-12-19 22:05:15,239] ({pool-2-thread-2} HiveMetaStore.java[logInfo]:746) – 0: get_functions: db=default pat=*

INFO [2016-12-19 22:05:15,240] ({pool-2-thread-2} HiveMetaStore.java[logAuditEvent]:371) – ugi=ubuntu ip=unknown-ip-addr cmd=get_functions: db=default pat=*

INFO [2016-12-19 22:05:15,241] ({pool-2-thread-2} Log4JLogger.java[info]:77) – The class “org.apache.hadoop.hive.metastore.model.MResourceUri” is tagged as “embedded-only” so does not have its own datastore table.

INFO [2016-12-19 22:05:15,391] ({pool-2-thread-2} SessionState.java[createPath]:641) – Created local directory: /tmp/42135dca-bb3a-495c-b470-6694def15e9c_resources

INFO [2016-12-19 22:05:15,402] ({pool-2-thread-2} SessionState.java[createPath]:641) – Created HDFS directory: /tmp/hive/ubuntu/42135dca-bb3a-495c-b470-6694def15e9c

INFO [2016-12-19 22:05:15,409] ({pool-2-thread-2} SessionState.java[createPath]:641) – Created local directory: /tmp/ubuntu/42135dca-bb3a-495c-b470-6694def15e9c

INFO [2016-12-19 22:05:15,418] ({pool-2-thread-2} SessionState.java[createPath]:641) – Created HDFS directory: /tmp/hive/ubuntu/42135dca-bb3a-495c-b470-6694def15e9c/_tmp_space.db

INFO [2016-12-19 22:05:28,100] ({pool-2-thread-2} SchedulerFactory.java[jobFinished]:137) – Job remoteInterpretJob_1482185097958 finished by scheduler interpreter_522907625

INFO [2016-12-19 22:06:10,960] ({pool-2-thread-3} SchedulerFactory.java[jobStarted]:131) – Job remoteInterpretJob_1482185170960 started by scheduler org.apache.zeppelin.spark.SparkInterpreter1310937891

INFO [2016-12-19 22:06:12,967] ({pool-2-thread-3} Logging.scala[logInfo]:58) – Starting job: reduce at <console>:35

INFO [2016-12-19 22:06:12,983] ({dag-scheduler-event-loop} Logging.scala[logInfo]:58) – Got job 0 (reduce at <console>:35) with 2 output partitions

INFO [2016-12-19 22:06:12,983] ({dag-scheduler-event-loop} Logging.scala[logInfo]:58) – Final stage: ResultStage 0 (reduce at <console>:35)

INFO [2016-12-19 22:06:12,983] ({dag-scheduler-event-loop} Logging.scala[logInfo]:58) – Parents of final stage: List()

INFO [2016-12-19 22:06:12,984] ({dag-scheduler-event-loop} Logging.scala[logInfo]:58) – Missing parents: List()

INFO [2016-12-19 22:06:12,992] ({dag-scheduler-event-loop} Logging.scala[logInfo]:58) – Submitting ResultStage 0 (MapPartitionsRDD[1] at map at <console>:31), which has no missing parents

INFO [2016-12-19 22:06:13,096] ({dag-scheduler-event-loop} Logging.scala[logInfo]:58) – Block broadcast_0 stored as values in memory (estimated size 1960.0 B, free 1960.0 B)

INFO [2016-12-19 22:06:13,106] ({dag-scheduler-event-loop} Logging.scala[logInfo]:58) – Block broadcast_0_piece0 stored as bytes in memory (estimated size 1235.0 B, free 3.1 KB)

INFO [2016-12-19 22:06:13,108] ({dispatcher-event-loop-1} Logging.scala[logInfo]:58) – Added broadcast_0_piece0 in memory on localhost:45107 (size: 1235.0 B, free: 511.1 MB)

INFO [2016-12-19 22:06:13,114] ({dag-scheduler-event-loop} Logging.scala[logInfo]:58) – Created broadcast 0 from broadcast at DAGScheduler.scala:1006

INFO [2016-12-19 22:06:13,117] ({dag-scheduler-event-loop} Logging.scala[logInfo]:58) – Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at <console>:31)

INFO [2016-12-19 22:06:13,119] ({dag-scheduler-event-loop} Logging.scala[logInfo]:58) – Adding task set 0.0 with 2 tasks

INFO [2016-12-19 22:06:13,127] ({dag-scheduler-event-loop} Logging.scala[logInfo]:58) – Added task set TaskSet_0 tasks to pool default

INFO [2016-12-19 22:06:13,168] ({dispatcher-event-loop-0} Logging.scala[logInfo]:58) – Starting task 0.0 in stage 0.0 (TID 0, localhost, partition 0,PROCESS_LOCAL, 2078 bytes)

INFO [2016-12-19 22:06:13,172] ({dispatcher-event-loop-0} Logging.scala[logInfo]:58) – Starting task 1.0 in stage 0.0 (TID 1, localhost, partition 1,PROCESS_LOCAL, 2135 bytes)

INFO [2016-12-19 22:06:13,176] ({Executor task launch worker-0} Logging.scala[logInfo]:58) – Running task 0.0 in stage 0.0 (TID 0)

INFO [2016-12-19 22:06:13,176] ({Executor task launch worker-1} Logging.scala[logInfo]:58) – Running task 1.0 in stage 0.0 (TID 1)

INFO [2016-12-19 22:06:13,209] ({Executor task launch worker-1} Logging.scala[logInfo]:58) – Finished task 1.0 in stage 0.0 (TID 1). 1031 bytes result sent to driver

INFO [2016-12-19 22:06:13,211] ({Executor task launch worker-0} Logging.scala[logInfo]:58) – Finished task 0.0 in stage 0.0 (TID 0). 1031 bytes result sent to driver

INFO [2016-12-19 22:06:13,235] ({task-result-getter-0} Logging.scala[logInfo]:58) – Finished task 1.0 in stage 0.0 (TID 1) in 48 ms on localhost (1/2)

INFO [2016-12-19 22:06:13,236] ({dag-scheduler-event-loop} Logging.scala[logInfo]:58) – ResultStage 0 (reduce at <console>:35) finished in 0.107 s

INFO [2016-12-19 22:06:13,239] ({pool-2-thread-3} Logging.scala[logInfo]:58) – Job 0 finished: reduce at <console>:35, took 0.271467 s

INFO [2016-12-19 22:06:13,247] ({task-result-getter-1} Logging.scala[logInfo]:58) – Finished task 0.0 in stage 0.0 (TID 0) in 98 ms on localhost (2/2)

INFO [2016-12-19 22:06:13,248] ({task-result-getter-1} Logging.scala[logInfo]:58) – Removed TaskSet 0.0, whose tasks have all completed, from pool default

INFO [2016-12-19 22:06:13,602] ({pool-2-thread-3} SchedulerFactory.java[jobFinished]:137) – Job remoteInterpretJob_1482185170960 finished by scheduler org.apache.zeppelin.spark.SparkInterpreter1310937891

I don’t see any issue in this log. This is the main Zeppelin log, please look for the *Spark* interpreter log.

Another thing that comes to my mind is your python version.

By the way, which Zeppelin version are you using?

Same issue here, any update on this?

It seems that zeppelin-env.sh needs an extra environment variable, SPARK_HOME, to be set.

Also make sure the py4jxxx.zip file does exist.
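The two checks suggested above can be sketched as a small pre-flight function. This is only an illustration: the function name check_pyspark_prereqs is made up, and it assumes the usual layout of a Spark distribution, where py4j ships as python/lib/py4j-<version>-src.zip under SPARK_HOME.

```shell
# Hypothetical pre-flight check before starting the PySpark interpreter.
# Assumes py4j lives in $SPARK_HOME/python/lib, as in standard Spark distributions.
check_pyspark_prereqs() {
  if [ -z "${SPARK_HOME:-}" ]; then
    echo "SPARK_HOME is not set" >&2
    return 1
  fi
  # Report the first py4j source archive found, if any.
  for zip in "${SPARK_HOME}"/python/lib/py4j-*-src.zip; do
    if [ -f "$zip" ]; then
      echo "found $zip"
      return 0
    fi
  done
  echo "no py4j-*-src.zip under ${SPARK_HOME}/python/lib" >&2
  return 1
}
```

Running check_pyspark_prereqs in the same shell that exports SPARK_HOME for Zeppelin (for example after sourcing conf/zeppelin-env.sh) should tell you quickly which of the two conditions is missing.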

Hi, I want to build a customized Zeppelin 0.6.2 that is compatible with Spark 1.6.0, the Cassandra-Spark connector 1.6.1 and Scala 2.10. How can I do that?

Here is the procedure to build your custom Zeppelin with a custom Spark version: http://zeppelin.apache.org/docs/snapshot/install/build.html

We use DSE 5.0.4 and I have installed Zeppelin 0.6.2.

In the Zeppelin interpreter settings, I have set the following:

artifact: com.datastax.spark:spark-cassandra-connector_2.10:1.6.0

master: spark://:7077

When I run Spark queries in Zeppelin, it reports an error, but shows no details in the Zeppelin UI.

What other configuration is required to make Zeppelin work with DSE Spark?

Also, Zeppelin internally calls spark-submit, but with DSE we need to call “dse spark-submit”?

Yes, you’re right, read my blog post carefully. In the $ZEPPELIN_HOME/bin/interpreter.sh file, there is an environment variable called $SPARK_SUBMIT. Instead of the classic spark-submit, set it to “dse spark-submit” and pass in the appropriate security options (for example username/password if you have activated security in DSE).

Thanks @doanduyhai. I don’t see this point mentioned in this blog post, is it in some other post? Anyway, I have fixed it based on your advice.
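As a rough sketch of that substitution, the helper below picks the submit command depending on which installation is present. The function name resolve_spark_submit is hypothetical, and DSE_HOME and SPARK_HOME are assumed to point at the DSE and Spark install directories.

```shell
# Hypothetical helper: choose the submit command for the Spark interpreter.
# DSE_HOME / SPARK_HOME are assumed to be the install directories.
resolve_spark_submit() {
  if [ -n "${DSE_HOME:-}" ]; then
    # DSE wraps spark-submit behind its own launcher.
    printf '%s\n' "${DSE_HOME}/bin/dse spark-submit"
  elif [ -n "${SPARK_HOME:-}" ]; then
    printf '%s\n' "${SPARK_HOME}/bin/spark-submit"
  else
    echo "neither DSE_HOME nor SPARK_HOME is set" >&2
    return 1
  fi
}
```

In interpreter.sh you could then write SPARK_SUBMIT="$(resolve_spark_submit)". Because the script expands ${SPARK_SUBMIT} unquoted, the two-word value splits into the dse command and its spark-submit subcommand; this breaks if the install path contains spaces.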

Thanks for the quick reply.

We have a requirement to run Spark SQL queries on DSE 5.0.1 (Cassandra cluster in Spark mode) from the Apache Zeppelin GUI.

We are using the ‘zeppelin-0.7.1-bin-all’ version. Can you please guide me on how to set $SPARK_SUBMIT in the $ZEPPELIN_HOME/bin/interpreter.sh file?

Change

export SPARK_SUBMIT="${SPARK_HOME}/bin/spark-submit"

to

export SPARK_SUBMIT="${DSE_HOME}/bin/dse spark-submit"

Hi Doanduyhai,

Thanks for your quick response,

Apache Zeppelin is configured and working fine. Can you tell me how to create users with specific roles (read only)?

I am looking at the conf/shiro.ini.template file and can see the default users and roles.

My requirement is to create two users, one for admin and another for developer (read only).

Can you please let us know how to do this?

Thanks

Nagendra

I am getting the error below when I run a Spark SQL query from Zeppelin, but the same SQL works from within the Spark cluster through “dse spark-sql”. Can you please help?

org.apache.spark.SparkException: Job aborted due to stage failure: ResultStage 1 (take at NativeMethodAccessorImpl.java:-2) has failed the maximum allowable number of times: 4. Most recent failure reason: org.apache.spark.shuffle.FetchFailedException: /var/lib/spark/rdd/spark-e8247297-7533-4a82-969f-6cc67c474638/executor-30646c9c-84fa-4e02-b364-d1f310891f16/blockmgr-c8999413-223e-4f9c-8fda-bff4edc4188f/30/shuffle_0_0_0.index (No such file or directory)

at org.apache.spark.storage.ShuffleBlockFetcherIterator.throwFetchFailedException(ShuffleBlockFetcherIterator.scala:323)

at org.apache.spark.storage.ShuffleBlockFetcherIterator.next(ShuffleBlockFetcherIterator.scala:300)

at org.apache.spark.storage.ShuffleBlockFetcherIterator.next(ShuffleBlockFetcherIterator.scala:51)

at scala.collection.Iterator$$anon$11.next(Iterator.scala:328)

at scala.collection.Iterator$$anon$13.hasNext(Iterator.scala:371)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:327)

at org.apache.spark.util.CompletionIterator.hasNext(CompletionIterator.scala:32)

at org.apache.spark.InterruptibleIterator.hasNext(InterruptibleIterator.scala:39)

at scala.collection.Iterator$$anon$11.hasNext(Iterator.scala:327)

at org.apache.spark.sql.execution.aggregate.TungstenAggregate$$anonfun$doExecute$1$$anonfun$2.apply(TungstenAggregate.scala:88)

at org.apache.spark.sql.execution.aggregate.TungstenAggregate$$anonfun$doExecute$1$$anonfun$2.apply(TungstenAggregate.scala:86)

at org.apache.spark.rdd.RDD$$anonfun$mapPartitions$1$$anonfun$apply$20.apply(RDD.scala:710)

at org.apache.spark.rdd.RDD$$anonfun$mapPartitions$1$$anonfun$apply$20.apply(RDD.scala:710)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:270)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:270)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:270)

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:270)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:66)

at org.apache.spark.scheduler.Task.run(Task.scala:89)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:227)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.io.FileNotFoundException: /var/lib/spark/rdd/spark-e8247297-7533-4a82-969f-6cc67c474638/executor-30646c9c-84fa-4e02-b364-d1f310891f16/blockmgr-c8999413-223e-4f9c-8fda-bff4edc4188f/30/shuffle_0_0_0.index (No such file or directory)

at java.io.FileInputStream.open0(Native Method)

at java.io.FileInputStream.open(FileInputStream.java:195)

at java.io.FileInputStream.<init>(FileInputStream.java:138)

at org.apache.spark.shuffle.IndexShuffleBlockResolver.getBlockData(IndexShuffleBlockResolver.scala:191)

at org.apache.spark.storage.BlockManager.getBlockData(BlockManager.scala:298)

at org.apache.spark.storage.ShuffleBlockFetcherIterator.fetchLocalBlocks(ShuffleBlockFetcherIterator.scala:238)

at org.apache.spark.storage.ShuffleBlockFetcherIterator.initialize(ShuffleBlockFetcherIterator.scala:269)

at org.apache.spark.storage.ShuffleBlockFetcherIterator.<init>(ShuffleBlockFetcherIterator.scala:112)

at org.apache.spark.shuffle.BlockStoreShuffleReader.read(BlockStoreShuffleReader.scala:43)

at org.apache.spark.sql.execution.ShuffledRowRDD.compute(ShuffledRowRDD.scala:166)

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306)

at org.apache.spark.rdd.RDD.iterator(RDD.scala:270)

… 18 more

Weird. Can you please check the permissions on the folder /var/lib/spark/rdd/spark-e8247297-7533-4a82-969f-6cc67c474638/ to see if you have the rights to read and write inside it?

Which user is running the Zeppelin process?

I can see that it starts writing to /var/lib/spark/rdd/spark-e8247297-7533-4a82-969f-6cc67c474638, owner of which is cassandra user. This folder gets created when I invoke spark.sql from zeppelin. After running for a while (14% complete), it fails with this error.

I am able to run the same query from zeppelin with pyspark. The problem is only with zeppelin spark.sql

I ran zeppelin as ec2-user and also tried to run as root, but same error.

Did you see all the shuffle_x_x_x.index files being created or not?

It creates the shuffle files as shown below, then they disappear. It tries the same thing 4 times and finally errors out.

Is there some maximum shuffle limit that it is hitting?

[root@ip-172-31-12-201 ec2-user]# ls -lRt /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/*/shuffle*

-rw-r--r-- 1 cassandra cassandra 3841 Feb 7 13:42 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/02/shuffle_2_8_0.data

-rw-r--r-- 1 cassandra cassandra 1608 Feb 7 13:42 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/06/shuffle_2_8_0.index

-rw-r--r-- 1 cassandra cassandra 1608 Feb 7 13:42 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/39/shuffle_2_7_0.index

-rw-r--r-- 1 cassandra cassandra 3843 Feb 7 13:42 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/1d/shuffle_2_7_0.data

-rw-r--r-- 1 cassandra cassandra 3844 Feb 7 13:42 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/04/shuffle_2_6_0.data

-rw-r--r-- 1 cassandra cassandra 1608 Feb 7 13:42 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/08/shuffle_2_6_0.index

-rw-r--r-- 1 cassandra cassandra 3842 Feb 7 13:42 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/25/shuffle_2_5_0.data

-rw-r--r-- 1 cassandra cassandra 1608 Feb 7 13:42 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/37/shuffle_2_5_0.index

-rw-r--r-- 1 cassandra cassandra 1608 Feb 7 13:42 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/0a/shuffle_2_4_0.index

-rw-r--r-- 1 cassandra cassandra 3842 Feb 7 13:42 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/3a/shuffle_2_4_0.data

-rw-r--r-- 1 cassandra cassandra 3840 Feb 7 13:42 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/27/shuffle_2_3_0.data

-rw-r--r-- 1 cassandra cassandra 1608 Feb 7 13:42 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/35/shuffle_2_3_0.index

-rw-r--r-- 1 cassandra cassandra 1608 Feb 7 13:41 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/0c/shuffle_2_2_0.index

-rw-r--r-- 1 cassandra cassandra 3841 Feb 7 13:41 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/38/shuffle_2_2_0.data

-rw-r--r-- 1 cassandra cassandra 3842 Feb 7 13:41 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/0a/shuffle_2_0_0.data

-rw-r--r-- 1 cassandra cassandra 1608 Feb 7 13:41 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/32/shuffle_2_0_0.index

-rw-r--r-- 1 cassandra cassandra 1608 Feb 7 13:41 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/0d/shuffle_2_1_0.index

-rw-r--r-- 1 cassandra cassandra 3842 Feb 7 13:41 /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-ff2112a5-ef25-473d-a6d5-4ca456223086/blockmgr-9ed0ed18-8a1f-4564-953a-f065237e8e87/29/shuffle_2_1_0.data

Really weird. When you use spark-sql with DSE, do you see the same behavior (shuffle files being created then deleted again and again)?

No, when running with spark-sql, it creates them only once. I see up to 200 shuffle files created, and they get deleted only when I exit the spark-sql prompt.

I see the error below in /var/lib/spark/worker/app-20170208052915-0013/0

ERROR 2017-02-08 05:31:05,709 Logging.scala:95 – org.apache.spark.util.SparkUncaughtExceptionHandler: Uncaught exception in thread Thread[Executor task launch worker-1,5,main]

java.lang.OutOfMemoryError: Java heap space

at java.util.PriorityQueue.<init>(PriorityQueue.java:168) ~[na:1.8.0_121]

at org.apache.spark.util.BoundedPriorityQueue.<init>(BoundedPriorityQueue.scala:34) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD$$anonfun$takeOrdered$1$$anonfun$29.apply(RDD.scala:1390) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD$$anonfun$takeOrdered$1$$anonfun$29.apply(RDD.scala:1388) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD$$anonfun$mapPartitions$1$$anonfun$apply$20.apply(RDD.scala:710) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD$$anonfun$mapPartitions$1$$anonfun$apply$20.apply(RDD.scala:710) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD.iterator(RDD.scala:270) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:66) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.scheduler.Task.run(Task.scala:89) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:227) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [na:1.8.0_121]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [na:1.8.0_121]

at java.lang.Thread.run(Thread.java:745) [na:1.8.0_121]

ERROR 2017-02-08 05:31:05,842 Logging.scala:95 – org.apache.spark.storage.ShuffleBlockFetcherIterator: Error occurred while fetching local blocks

java.io.FileNotFoundException: /var/lib/spark/rdd/spark-3cd4577d-5a4f-406f-bb3b-ec75c4be50de/executor-262dab3c-ec6c-4b79-ab01-b9e88d6608b9/blockmgr-6bc49550-25de-4036-969a-87bac53e75cc/30/shuffle_0_0_0.index (No such file or directory)

at java.io.FileInputStream.open0(Native Method) ~[na:1.8.0_121]

at java.io.FileInputStream.open(FileInputStream.java:195) ~[na:1.8.0_121]

at java.io.FileInputStream.<init>(FileInputStream.java:138) ~[na:1.8.0_121]

at org.apache.spark.shuffle.IndexShuffleBlockResolver.getBlockData(IndexShuffleBlockResolver.scala:191) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.storage.BlockManager.getBlockData(BlockManager.scala:298) ~[spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.storage.ShuffleBlockFetcherIterator.fetchLocalBlocks(ShuffleBlockFetcherIterator.scala:238) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.storage.ShuffleBlockFetcherIterator.initialize(ShuffleBlockFetcherIterator.scala:269) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.storage.ShuffleBlockFetcherIterator.<init>(ShuffleBlockFetcherIterator.scala:112) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.shuffle.BlockStoreShuffleReader.read(BlockStoreShuffleReader.scala:43) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.sql.execution.ShuffledRowRDD.compute(ShuffledRowRDD.scala:166) [spark-sql_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD.iterator(RDD.scala:270) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD.iterator(RDD.scala:270) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD.iterator(RDD.scala:270) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD.iterator(RDD.scala:270) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.MapPartitionsRDD.compute(MapPartitionsRDD.scala:38) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:306) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.rdd.RDD.iterator(RDD.scala:270) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:66) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.scheduler.Task.run(Task.scala:89) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:227) [spark-core_2.10-1.6.2.2.jar:1.6.2.2]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [na:1.8.0_121]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [na:1.8.0_121]

at java.lang.Thread.run(Thread.java:745) [na:1.8.0_121]

zeppelin.spark.maxResult 99999999

We changed the above to 100 and now the issue is resolved.

The weird part is, that query’s resultset was only 10 rows.

Thanks for the update. So you mean that Spark would pre-allocate memory for the results? Weird…

I don’t understand how changing this parameter fixed the issue.

Spark only has a maxResultSize parameter (spark.driver.maxResultSize); I don’t think it has anything like maxResult?
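One possible reading of the OutOfMemoryError above, offered as a guess rather than a confirmed diagnosis: the trace fails inside the java.util.PriorityQueue constructor, called from BoundedPriorityQueue in RDD.takeOrdered. java.util.PriorityQueue allocates its backing array for the requested initial capacity up front. If the value of zeppelin.spark.maxResult (a Zeppelin property, not a Spark one) ends up as that capacity, which is an assumption here, each task would reserve room for 99999999 references no matter how few rows the query returns. The arithmetic can be sketched as follows; the function name queue_array_mib and the 4-byte reference size (compressed object pointers) are illustrative.

```shell
# Rough size, in MiB, of the reference array a java.util.PriorityQueue
# allocates up front for a given initial capacity.
# ref_bytes is an assumption: 4 with compressed oops, 8 without.
queue_array_mib() {
  capacity=$1
  ref_bytes=${2:-4}
  echo $(( capacity * ref_bytes / 1024 / 1024 ))
}
```

With these assumptions, queue_array_mib 99999999 prints 381 and queue_array_mib 99999999 8 prints 762, that is several hundred MiB per running task, while queue_array_mib 100 prints 0. That would be consistent with the problem disappearing once the value was lowered to 100.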

In the Zeppelin Spark interpreter config, when I click on the Spark UI link, it eventually resolves to my server’s private IP (192.168.*.*) and times out. I tried to change the Spark master to spark://myPublicIP:7077, but when I run a Spark job, this is in the log:

Connecting to master spark://10..x.x.x:7077…

Application has been killed. Reason: All masters are unresponsive! Giving up.

If I run this on my own computer, everything just resolves to localhost and it works, but not when I deploy it to a server.

Yes, you’re facing the classic public/private IP address issue.

You need to configure your Spark to listen on your public IP.
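A minimal sketch of what that could look like in conf/spark-env.sh on the Spark master host. The address 203.0.113.10 is a placeholder and PUBLIC_IP is just a local helper variable; the three exported names are standard Spark environment variables.

```shell
# conf/spark-env.sh (sketch; replace the placeholder address with your own)
PUBLIC_IP=203.0.113.10

# Address the standalone master binds to (SPARK_MASTER_IP on Spark 1.x).
export SPARK_MASTER_HOST="${PUBLIC_IP}"
# Address the Spark processes on this machine bind to.
export SPARK_LOCAL_IP="${PUBLIC_IP}"
# Host name or address advertised in the web UI links.
export SPARK_PUBLIC_DNS="${PUBLIC_IP}"
```

On hosts where the public address is not attached to a local network interface (NAT setups, for example), binding to it will fail. In that case it may be enough to keep the private address for binding and only set SPARK_PUBLIC_DNS, provided the required ports are reachable from the Zeppelin host.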

Thank you for the detailed explanation and custom builds. I’m having one problem though.

Using your custom build zeppelin-0.6.1-dse-5.0.2-5.0.3.tar.gz against DSE 5.0.5, I was able to get Zeppelin to work if I added an explicit artifact to the Spark interpreter config.

com.datastax.spark:spark-cassandra-connector_2.10:1.6.3

I can read from Cassandra and do everything I expect. However, whenever I try to use any of the DoubleRDD functions (sum, mean, stddev), I get an error. For example:

val x = sc.parallelize(Seq(10.0, 20.0, 25.0))

x.sum

x: org.apache.spark.rdd.RDD[Double] = ParallelCollectionRDD[159] at parallelize at <console>:32

org.apache.spark.SparkException: Job aborted due to stage failure: Task 7 in stage 1.0 failed 4 times, most recent failure: Lost task 7.3 in stage 1.0 (TID 101, ip-192-168-2-112.ec2.internal): java.lang.ClassCastException: org.apache.spark.SparkContext$$anonfun$36 cannot be cast to scala.Function2

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:61)

at org.apache.spark.scheduler.Task.run(Task.scala:89)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:227)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1431)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1419)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1418)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:47)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1418)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:799)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:799)

It appears to be a version mismatch, but only on the DoubleRDD functions. I can do map, groupBy, join, and all of that on data loaded from Cassandra.

Update on this problem. I created a new interpreter/spark/dep/zeppelin-spark-dependencies_2.10-0.6.1.jar by injecting a new version of spark by copying /usr/share/dse/spark/lib/spark-core-2.10-1.6.2.3.jar and restarting zeppelin.

This fixed the problem and now it works. I did have to restart both Zeppelin and my DSE spark cluster for some reason before it worked.

I have updated the custom builds table with a build for DSE-5.0.4, DSE-5.0.5 and DSE-5.0.6

Could you please update the custom builds for DataStax Enterprise 5.1 (Spark 2.0)?

Thanks

The version matrix has been updated. Check the Google Drive folder to download the correct binaries.

Hello, I am using vanilla Spark 2.1.0 with Scala 2.11, Zeppelin 0.7 and Cassandra 3.0.13. Zeppelin is connecting to Cassandra and Spark fine, but I am having trouble integrating the Spark Cassandra connector. Do you have the assembly .jar for those versions of the stack?

I am behind the company firewall. Looking forward to your reply.

Thanks

You can:

1) Check out the Spark Cassandra connector version 2.0.2 source code from Git

2) Type “sbt assembly” to build the assembly jar

This assumes that you have a Scala dev environment properly set up.
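The two steps above can be sketched as a small function that prints the commands to run. The function name connector_build_commands is made up, and the sketch assumes the connector sources live in the datastax/spark-cassandra-connector repository on GitHub with release tags of the form v2.0.2; check the repository for the exact tag name.

```shell
# Hypothetical helper: print the commands that build the connector assembly jar.
# Assumes release tags look like "v<version>" in the connector repository.
connector_build_commands() {
  version=${1:-2.0.2}
  cat <<EOF
git clone https://github.com/datastax/spark-cassandra-connector.git
cd spark-cassandra-connector
git checkout v${version}
sbt assembly
EOF
}
```

Printing the commands first lets you review them, then run them by hand or pipe them into a shell with connector_build_commands 2.0.2 | sh -e. Both the clone and the sbt dependency resolution need network access, so behind a firewall you may have to configure a proxy or an internal repository mirror.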

Hi, I am trying to connect Zeppelin 0.7.2 with Apache Spark 2.1.1 to DataStax Cassandra 5.0.8. I can successfully run the following code:

import com.datastax.spark.connector._

import org.apache.spark.SparkContext

import org.apache.spark.SparkContext._

import org.apache.spark.SparkConf

val conf = new SparkConf(true).set("spark.cassandra.connection.host", "x.x.x.x").set("spark.cassandra.auth.username", "scheduler_user").set("spark.cassandra.auth.password", "Scheduler_User")

val sc = new SparkContext(conf)

val table1 = sc.cassandraTable("keyspace", "abc_info")

But as soon as I run table1.first, I get a Java IO error for the local Spark directory.

The Cassandra node is remote. Spark and Zeppelin are local.

I would need the full stack trace to track down the root cause.

Hello,

We have a requirement to create users with specific roles (read only).

I am looking at the conf/shiro.ini.template file and can see the default users and roles.

My requirement is to create two users, one for admin and another for developer (read only).

Can you please let us know how to do this?

Thanks

Nagendra

I think it’s more of a Zeppelin configuration issue, so you may want to ask on the Zeppelin mailing list. I’m not an expert in Apache Shiro configuration, so I can’t help much, sorry.

zeppelin-0.7.1-dse-5.1.1.tar.gz this piece of shit does not work at all…

got the following error:

java.lang.AssertionError: Unknown application type

at org.apache.spark.deploy.master.DseSparkMaster.registerApplication(DseSparkMaster.scala:88) ~[dse-spark-5.1.1.jar:2.0.2.6]

at org.apache.spark.deploy.master.Master$$anonfun$receive$1.applyOrElse(Master.scala:238) ~[spark-core_2.11-2.0.2.6.jar:2.0.2.6]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171) ~[scala-library-2.11.8.jar:na]

at scala.PartialFunction$OrElse.applyOrElse(PartialFunction.scala:171) ~[scala-library-2.11.8.jar:na]

at org.apache.spark.rpc.netty.Inbox$$anonfun$process$1.apply$mcV$sp(Inbox.scala:117) ~[spark-core_2.11-2.0.2.6.jar:2.0.2.6]

at org.apache.spark.rpc.netty.Inbox.safelyCall(Inbox.scala:205) [spark-core_2.11-2.0.2.6.jar:2.0.2.6]

at org.apache.spark.rpc.netty.Inbox.process(Inbox.scala:101) [spark-core_2.11-2.0.2.6.jar:2.0.2.6]

at org.apache.spark.rpc.netty.Dispatcher$MessageLoop.run(Dispatcher.scala:213) [spark-core_2.11-2.0.2.6.jar:2.0.2.6]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [na:1.8.0_131]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [na:1.8.0_131]

at java.lang.Thread.run(Thread.java:748) [na:1.8.0_131]

Hello Doanduyhai,

We are using DSE 5.0.1 and Zeppelin 0.7.1.

We are trying to execute the code below, but it throws an error. Can you please guide us to resolve the issue?

import org.apache.spark.sql.cassandra._

import com.datastax.spark.connector._

import com.datastax.spark.connector.cql._

import org.apache.spark.SparkContext

import org.apache.spark.SparkConf

val conf = new SparkConf(true)

conf.set("spark.cassandra.connection.host", "*.*.*.*")

conf.set("spark.cassandra.auth.username", "*****")

conf.set("spark.cassandra.auth.password", "*****")

val sc = new SparkContext(conf)

val sqlContext1 = new CassandraSQLContext(sc);

sqlContext1.setKeyspace("keyspacename");

val results = sqlContext1.sql("SELECT * FROM keyspace.tablename limit 10");

//results.collect().foreach(println);

results.take(1).foreach(println);

ERROR:

org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 4 times, most recent failure: Lost task 0.3 in stage 0.0 (TID 3, esu1l501.federated.fds): java.io.InvalidClassException: com.datastax.spark.connector.rdd.CassandraTableScanRDD; local class incompatible: stream classdesc serialVersionUID = -3323774324987049630, local class serialVersionUID = 5341884440951470345

at java.io.ObjectStreamClass.initNonProxy(ObjectStreamClass.java:616)

at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1630)

at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1521)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1781)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1353)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2018)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:1942)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1808)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1353)

at java.io.ObjectInputStream.readObject(ObjectInputStream.java:373)

at scala.collection.immutable.$colon$colon.readObject(List.scala:362)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at java.io.ObjectStreamClass.invokeReadObject(ObjectStreamClass.java:1058)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:1909)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1808)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1353)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2018)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:1942)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1808)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1353)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2018)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:1942)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1808)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1353)

at java.io.ObjectInputStream.readObject(ObjectInputStream.java:373)

at scala.collection.immutable.$colon$colon.readObject(List.scala:362)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at java.io.ObjectStreamClass.invokeReadObject(ObjectStreamClass.java:1058)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:1909)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1808)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1353)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2018)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:1942)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1808)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1353)

at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2018)

at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:1942)

at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:1808)

at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1353)

at java.io.ObjectInputStream.readObject(ObjectInputStream.java:373)

at org.apache.spark.serializer.JavaDeserializationStream.readObject(JavaSerializer.scala:76)

at org.apache.spark.serializer.JavaSerializerInstance.deserialize(JavaSerializer.scala:115)

at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:61)

at org.apache.spark.scheduler.Task.run(Task.scala:89)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:214)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Driver stacktrace:

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$failJobAndIndependentStages(DAGScheduler.scala:1431)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1419)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$abortStage$1.apply(DAGScheduler.scala:1418)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:47)

at org.apache.spark.scheduler.DAGScheduler.abortStage(DAGScheduler.scala:1418)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:799)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$handleTaskSetFailed$1.apply(DAGScheduler.scala:799)

at scala.Option.foreach(Option.scala:236)

at org.apache.spark.scheduler.DAGScheduler.handleTaskSetFailed(DAGScheduler.scala:799)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.doOnReceive(DAGScheduler.scala:1640)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:1599)

at org.apache.spark.scheduler.DAGSchedulerEventProcessLoop.onReceive(DAGScheduler.scala:1588)

at org.apache.spark.util.EventLoop$$anon$1.run(EventLoop.scala:48)

at org.apache.spark.scheduler.DAGScheduler.runJob(DAGScheduler.scala:620)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:1832)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:1845)

at org.apache.spark.SparkContext.runJob(SparkContext.scala:1858)

at org.apache.spark.sql.execution.SparkPlan.executeTake(SparkPlan.scala:212)

at org.apache.spark.sql.execution.Limit.executeCollect(basicOperators.scala:165)

at org.apache.spark.sql.execution.SparkPlan.executeCollectPublic(SparkPlan.scala:174)

at org.apache.spark.sql.DataFrame$$anonfun$org$apache$spark$sql$DataFrame$$execute$1$1.apply(DataFrame.scala:1499)

at org.apache.spark.sql.DataFrame$$anonfun$org$apache$spark$sql$DataFrame$$execute$1$1.apply(DataFrame.scala:1499)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:56)

at org.apache.spark.sql.DataFrame.withNewExecutionId(DataFrame.scala:2086)

at org.apache.spark.sql.DataFrame.org$apache$spark$sql$DataFrame$$execute$1(DataFrame.scala:1498)

at org.apache.spark.sql.DataFrame.org$apache$spark$sql$DataFrame$$collect(DataFrame.scala:1505)

at org.apache.spark.sql.DataFrame$$anonfun$head$1.apply(DataFrame.scala:1375)

at org.apache.spark.sql.DataFrame$$anonfun$head$1.apply(DataFrame.scala:1374)

at org.apache.spark.sql.DataFrame.withCallback(DataFrame.scala:2099)

at org.apache.spark.sql.DataFrame.head(DataFrame.scala:1374)

at org.apache.spark.sql.DataFrame.take(DataFrame.scala:1456)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$8edb458bd4376112359e4e33be5a4$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:194)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$8edb458bd4376112359e4e33be5a4$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:199)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$8edb458bd4376112359e4e33be5a4$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:201)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$8edb458bd4376112359e4e33be5a4$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:203)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$8edb458bd4376112359e4e33be5a4$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:205)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$2be621c63b9f183e85f92eaa7e12790$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:207)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$2be621c63b9f183e85f92eaa7e12790$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:209)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$2be621c63b9f183e85f92eaa7e12790$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:211)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$2be621c63b9f183e85f92eaa7e12790$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:213)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$2be621c63b9f183e85f92eaa7e12790$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:215)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$afab7c86681139df3241c999f2dafc38$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:217)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$afab7c86681139df3241c999f2dafc38$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:219)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$afab7c86681139df3241c999f2dafc38$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:221)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$afab7c86681139df3241c999f2dafc38$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:223)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$afab7c86681139df3241c999f2dafc38$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:225)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$3baf9f919752f0ab1f5a31ad94af9f4$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:227)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$3baf9f919752f0ab1f5a31ad94af9f4$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:229)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$3baf9f919752f0ab1f5a31ad94af9f4$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:231)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$3baf9f919752f0ab1f5a31ad94af9f4$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:233)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$3baf9f919752f0ab1f5a31ad94af9f4$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:235)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$b968e173293ba7cd5c79f2d1143fd$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:237)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$b968e173293ba7cd5c79f2d1143fd$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:239)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$b968e173293ba7cd5c79f2d1143fd$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:241)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$b968e173293ba7cd5c79f2d1143fd$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:243)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$b968e173293ba7cd5c79f2d1143fd$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:245)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$b968e173293ba7cd5c79f2d1143fd$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:247)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$17f9c57b34a761248de8af38492ff086$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:249)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$17f9c57b34a761248de8af38492ff086$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:251)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$17f9c57b34a761248de8af38492ff086$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:253)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$17f9c57b34a761248de8af38492ff086$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:255)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$17f9c57b34a761248de8af38492ff086$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:257)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$bec1ee5c9e2e4d5af247761bdfbc3b3$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:259)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$bec1ee5c9e2e4d5af247761bdfbc3b3$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:261)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$bec1ee5c9e2e4d5af247761bdfbc3b3$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:263)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$bec1ee5c9e2e4d5af247761bdfbc3b3$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:265)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$bec1ee5c9e2e4d5af247761bdfbc3b3$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:267)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$5acc5a6ce0af8ab20753597dcc84fc0$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:269)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$5acc5a6ce0af8ab20753597dcc84fc0$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:271)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$5acc5a6ce0af8ab20753597dcc84fc0$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:273)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$5acc5a6ce0af8ab20753597dcc84fc0$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:275)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$5acc5a6ce0af8ab20753597dcc84fc0$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:277)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$33d793dde4292884a4720419646f1$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:279)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$33d793dde4292884a4720419646f1$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:281)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$33d793dde4292884a4720419646f1$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:283)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$33d793dde4292884a4720419646f1$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:285)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$33d793dde4292884a4720419646f1$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:287)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$33d793dde4292884a4720419646f1$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:289)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$725d9ae18728ec9520b65ad133e3b55$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:291)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$725d9ae18728ec9520b65ad133e3b55$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:293)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$725d9ae18728ec9520b65ad133e3b55$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:295)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$725d9ae18728ec9520b65ad133e3b55$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:297)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$725d9ae18728ec9520b65ad133e3b55$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:299)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$3d99ae6e19b65c7f617b22f29b431fb$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:301)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$3d99ae6e19b65c7f617b22f29b431fb$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:303)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$3d99ae6e19b65c7f617b22f29b431fb$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:305)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$3d99ae6e19b65c7f617b22f29b431fb$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:307)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$3d99ae6e19b65c7f617b22f29b431fb$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:309)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$ad149dbdbd963d0c9dc9b1d6f07f5e$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:311)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$ad149dbdbd963d0c9dc9b1d6f07f5e$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:313)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$ad149dbdbd963d0c9dc9b1d6f07f5e$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:315)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$ad149dbdbd963d0c9dc9b1d6f07f5e$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:317)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$ad149dbdbd963d0c9dc9b1d6f07f5e$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:319)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$6e49527b15a75f3b188beeb1837a4f1$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:321)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$6e49527b15a75f3b188beeb1837a4f1$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:323)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$6e49527b15a75f3b188beeb1837a4f1$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:325)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$6e49527b15a75f3b188beeb1837a4f1$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:327)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$6e49527b15a75f3b188beeb1837a4f1$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:329)

at $iwC$$iwC$$iwC$$iwC$$iwC$$$$93297bcd59dca476dd569cf51abed168$$$$$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC$$iwC.<init>(<console>:331)